
I built a small Python sample that trains a neural network to learn the XOR operation.

```python
# neural network xor

import math
import random

import matplotlib.pyplot as plt
import numpy as np

# Learning rate of the network (about sqrt(2))
LEARNING_RATE = 1.414213562
# Fraction of the previous weight update added to the current one
MOMENTUM = 0.25

# Training data (network inputs)
TRAINING_DATA = [
    [1.0, 0.0],
    [1.0, 1.0],
    [0.0, 1.0],
    [0.0, 0.0],
]

# Target values (XOR of each input pair)
TARGET_DATA = [
    1.0,
    0.0,
    1.0,
    0.0,
]

MAX_LAYERS = 4       # despite the name: the number of training patterns
MAX_WEIGHTS = 9
MAX_EPOCHS = 10000

# Weights and per-weight training state
weights = [0.0] * MAX_WEIGHTS
gradients = [0.0] * MAX_WEIGHTS
error = [0.0] * MAX_LAYERS               # output error for each training pattern
update_weights = [0.0] * MAX_WEIGHTS
prev_weight_update = [0.0] * MAX_WEIGHTS
rmse_array_error = [0.0] * MAX_EPOCHS    # RMSE error for each epoch

bias1 = 1.0
bias2 = 1.0
bias3 = 1.0

h1 = 0.0
h2 = 0.0
output_neuron = 0.0

derivative_O1 = 0.0
derivative_h1 = 0.0
derivative_h2 = 0.0

sum_output = 0.0
sum_h1 = 0.0
sum_h2 = 0.0

# Buffers for the graphs, indexed as [training pattern][epoch]
graph_sum_output = [[0.0] * MAX_EPOCHS for _ in range(MAX_LAYERS)]
graph_derivative_O1 = [[0.0] * MAX_EPOCHS for _ in range(MAX_LAYERS)]
graph_derivative_h1 = [[0.0] * MAX_EPOCHS for _ in range(MAX_LAYERS)]
graph_derivative_h2 = [[0.0] * MAX_EPOCHS for _ in range(MAX_LAYERS)]

r'''
              bias1   bias3
                |       |
                w4      w8
                |       |
input1 ---w0--- h1      |
         \     /  \     |
          \   /    \ w6 |
        w1 \ / w2   \   |
            X        output
           / \      /
          /   \    / w7
         /     \  /
input2 ---w3--- h2
                |
                w5
                |
              bias2
'''


def sigmoid_function(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_derivative(x):
    # sigmoid'(x) = e^x / (1 + e^x)^2 = sigmoid(x) * (1 - sigmoid(x))
    s = sigmoid_function(x)
    return s * (1.0 - s)


# Reset the training state
def initialize():
    for i in range(MAX_WEIGHTS):
        prev_weight_update[i] = 0.0
        update_weights[i] = 0.0
        gradients[i] = 0.0
    for i in range(MAX_LAYERS):
        error[i] = 0.0
    for i in range(MAX_EPOCHS):
        rmse_array_error[i] = 0.0


# Random initial weights in [-1, 1]
def generate_weights():
    for i in range(MAX_WEIGHTS):
        weights[i] = random.uniform(-1.0, 1.0)
        print("weight[", i, "] = ", weights[i])


def train_neural_net():
    epoch = 0  # epoch (number of passes over the training data)
    while epoch < MAX_EPOCHS:
        # Online learning: the weights are updated after every training pattern
        for i in range(MAX_LAYERS):
            calc_hidden_layers(i)
            calc_output_neuron()
            graph_sum_output[i][epoch] = sum_output
            calc_error(i)
            calc_derivatives(i)
            graph_derivative_O1[i][epoch] = derivative_O1
            graph_derivative_h1[i][epoch] = derivative_h1
            graph_derivative_h2[i][epoch] = derivative_h2
            calc_gradients(i)
            calc_updates()

        # RMSE error over the four training patterns
        sum_sq = sum(e * e for e in error)
        rmse_error = math.sqrt(sum_sq / MAX_LAYERS)
        print("RMSE error: ", rmse_error)
        rmse_array_error[epoch] = rmse_error

        epoch += 1
        print("epoch:", epoch)

        # Not converging: start over from the beginning with new random weights
        if epoch > 4000 and rmse_error > 0.3:
            epoch = 0
            initialize()
            generate_weights()


def calc_hidden_layers(x):
    global sum_h1, sum_h2, h1, h2
    sum_h1 = (TRAINING_DATA[x][0] * weights[0]) + (TRAINING_DATA[x][1] * weights[2]) + (bias1 * weights[4])
    sum_h2 = (TRAINING_DATA[x][0] * weights[1]) + (TRAINING_DATA[x][1] * weights[3]) + (bias2 * weights[5])
    h1 = sigmoid_function(sum_h1)
    h2 = sigmoid_function(sum_h2)


def calc_output_neuron():
    global sum_output, output_neuron
    sum_output = (h1 * weights[6]) + (h2 * weights[7]) + (bias3 * weights[8])
    output_neuron = sigmoid_function(sum_output)


def calc_error(x):
    error[x] = output_neuron - TARGET_DATA[x]


# Backpropagated error terms (deltas). The sign is flipped so that adding
# LEARNING_RATE * gradient in calc_updates() moves the weights downhill.
def calc_derivatives(x):
    global derivative_O1, derivative_h1, derivative_h2
    derivative_O1 = -error[x] * sigmoid_derivative(sum_output)
    derivative_h1 = sigmoid_derivative(sum_h1) * weights[6] * derivative_O1
    derivative_h2 = sigmoid_derivative(sum_h2) * weights[7] * derivative_O1


# Negative gradient of the squared error for each weight:
# (value feeding into the weight) * (delta of the neuron the weight feeds)
def calc_gradients(x):
    gradients[0] = TRAINING_DATA[x][0] * derivative_h1
    gradients[1] = TRAINING_DATA[x][0] * derivative_h2
    gradients[2] = TRAINING_DATA[x][1] * derivative_h1
    gradients[3] = TRAINING_DATA[x][1] * derivative_h2
    gradients[4] = bias1 * derivative_h1
    gradients[5] = bias2 * derivative_h2
    gradients[6] = h1 * derivative_O1
    gradients[7] = h2 * derivative_O1
    gradients[8] = bias3 * derivative_O1


# Weight update with momentum
def calc_updates():
    for i in range(MAX_WEIGHTS):
        update_weights[i] = (LEARNING_RATE * gradients[i]) + (MOMENTUM * prev_weight_update[i])
        prev_weight_update[i] = update_weights[i]
        weights[i] += update_weights[i]


# Write "epoch rmse" and "index weight" lines to text files
def save_data():
    with open('errorData1.txt', 'w', encoding='utf-8') as f:
        for i in range(MAX_EPOCHS):
            f.write('%d %s\n' % (i, rmse_array_error[i]))
    with open('weight_data1.txt', 'w', encoding='utf-8') as f:
        for i in range(MAX_WEIGHTS):
            f.write('%d %s\n' % (i, weights[i]))


# Interactive test: feed two inputs to the trained network
def start_input():
    choice = 'y'
    while choice in ('Y', 'y'):
        try:
            data1 = float(input("enter data 1: "))
            data2 = float(input("enter data 2: "))
        except ValueError:
            continue  # not a number: ask again
        s_h1 = (data1 * weights[0]) + (data2 * weights[2]) + (bias1 * weights[4])
        s_h2 = (data1 * weights[1]) + (data2 * weights[3]) + (bias2 * weights[5])
        out_h1 = sigmoid_function(s_h1)
        out_h2 = sigmoid_function(s_h2)
        s_out = (out_h1 * weights[6]) + (out_h2 * weights[7]) + (bias3 * weights[8])
        print('output = ', sigmoid_function(s_out))
        choice = input('Again? (y/n) : ')
    print('exit')


initialize()
generate_weights()
train_neural_net()
save_data()
start_input()
```
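For reference, here is what standard backpropagation gives for the 2-2-1 network in the diagram, with squared error $E = \tfrac{1}{2}(o - t)^2$. The notation is mine, not the author's: $x_1, x_2$ are the inputs, $b_1, b_2, b_3$ the biases (all 1.0), $t$ the target, $\eta$ = `LEARNING_RATE`, $\alpha$ = `MOMENTUM`, and $n$ counts weight updates. In the code, $\delta_o$, $\delta_{h1}$ and $\delta_{h2}$ correspond to `derivative_O1`, `derivative_h1` and `derivative_h2`, and $g_i$ to `gradients[i]`. In standard backpropagation the input-side gradients use the raw inputs and bias values.

$$
\begin{aligned}
s_{h1} &= x_1 w_0 + x_2 w_2 + b_1 w_4, \qquad s_{h2} = x_1 w_1 + x_2 w_3 + b_2 w_5 \\
h_1 &= \sigma(s_{h1}), \qquad h_2 = \sigma(s_{h2}) \\
s_o &= h_1 w_6 + h_2 w_7 + b_3 w_8, \qquad o = \sigma(s_o) \\
\sigma'(s) &= \frac{e^{s}}{(1+e^{s})^2} = \sigma(s)\,\bigl(1-\sigma(s)\bigr) \\
\delta_o &= -(o - t)\,\sigma'(s_o), \qquad
\delta_{h1} = \sigma'(s_{h1})\, w_6\, \delta_o, \qquad
\delta_{h2} = \sigma'(s_{h2})\, w_7\, \delta_o \\
g_i &= -\frac{\partial E}{\partial w_i}: \quad
g_0 = x_1 \delta_{h1},\; g_1 = x_1 \delta_{h2},\; g_2 = x_2 \delta_{h1},\; g_3 = x_2 \delta_{h2},\; g_4 = b_1 \delta_{h1},\; g_5 = b_2 \delta_{h2} \\
&\phantom{= -\frac{\partial E}{\partial w_i}: \quad} g_6 = h_1 \delta_o,\; g_7 = h_2 \delta_o,\; g_8 = b_3 \delta_o \\
\Delta w_i^{(n)} &= \eta\, g_i + \alpha\, \Delta w_i^{(n-1)}, \qquad w_i \leftarrow w_i + \Delta w_i^{(n)} \\
\mathrm{RMSE} &= \sqrt{\tfrac{1}{4}\textstyle\sum_{p=0}^{3} (o_p - t_p)^2}
\end{aligned}
$$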

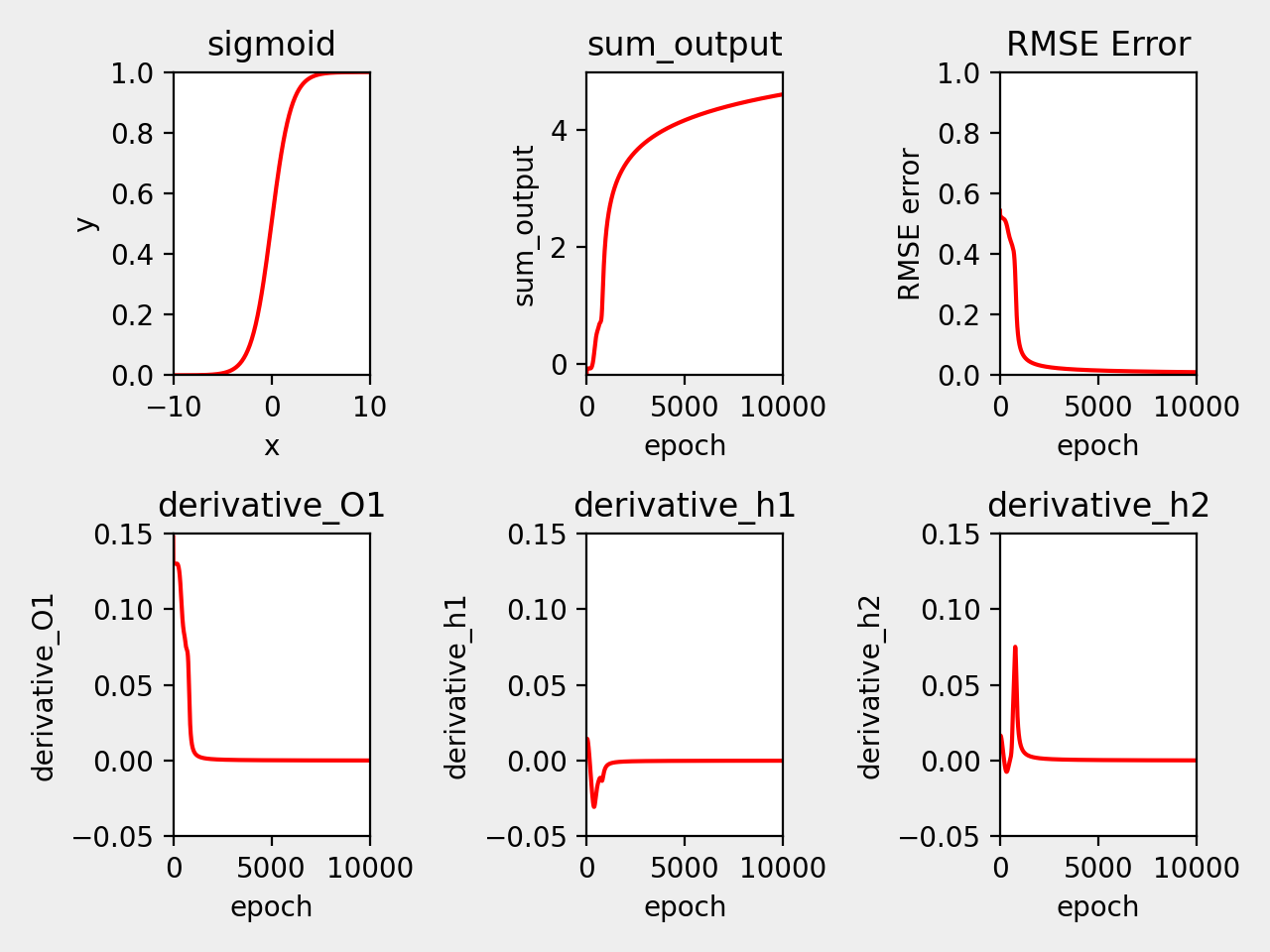

```python
# Continues the script above and uses its globals.
# Plots the sigmoid function and, for training pattern 0 ([1.0, 0.0]),
# how sum_output, the RMSE error and the deltas evolve over the epochs.

# sigmoid
x = np.arange(-10.0, 10.0, 0.1)
y = 1.0 / (1.0 + np.exp(-x))
plt.subplot(2, 3, 1)
plt.plot(x, y, 'r-')
plt.title('sigmoid')
plt.axis([-10.0, 10.0, 0, 1.0])  # xmin, xmax, ymin, ymax
plt.xlabel('x')
plt.ylabel('y')

epochs = np.arange(MAX_EPOCHS)
# (subplot index, data, title, y-axis label, (ymin, ymax))
panels = [
    (2, graph_sum_output[0], 'sum_output', 'sum_output', (-0.2, 5.0)),
    (3, rmse_array_error, 'RMSE Error', 'RMSE error', (0.0, 1.0)),
    (4, graph_derivative_O1[0], 'derivative_O1', 'derivative_O1', (-0.05, 0.15)),
    (5, graph_derivative_h1[0], 'derivative_h1', 'derivative_h1', (-0.05, 0.15)),
    (6, graph_derivative_h2[0], 'derivative_h2', 'derivative_h2', (-0.05, 0.15)),
]
for index, data, title, ylabel, (ymin, ymax) in panels:
    plt.subplot(2, 3, index)
    plt.plot(epochs, data, 'r-')
    plt.title(title)
    plt.axis([0.0, MAX_EPOCHS, ymin, ymax])  # xmin, xmax, ymin, ymax
    plt.xlabel('epoch')
    plt.ylabel(ylabel)

fig1 = plt.gcf()
plt.tight_layout()
# Save before show(), which blocks until the plot window is closed
fig1.savefig('nn_xor_derivative.png', dpi=200, facecolor='#eeeeee', edgecolor='blue')
plt.show()
```
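The bottom three panels plot the backpropagated error terms `derivative_O1`, `derivative_h1` and `derivative_h2`. A sign or factor mistake in these terms may still let the network fit XOR, so a finite-difference gradient check is a useful sanity test. The sketch below is a standalone re-implementation, not the author's code. It assumes the same weight indexing `w[0]`..`w[8]`, biases of 1.0 and $E = \tfrac{1}{2}(o - t)^2$. The helper names `forward`, `backprop_grads` and `numeric_grads` are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(w, x1, x2, b=1.0):
    """Forward pass with the script's layout: w[0..5] hidden layer, w[6..8] output."""
    s_h1 = x1 * w[0] + x2 * w[2] + b * w[4]
    s_h2 = x1 * w[1] + x2 * w[3] + b * w[5]
    h1, h2 = sigmoid(s_h1), sigmoid(s_h2)
    s_o = h1 * w[6] + h2 * w[7] + b * w[8]
    return h1, h2, sigmoid(s_o)


def backprop_grads(w, x1, x2, t, b=1.0):
    """g[i] = -dE/dw[i] for E = 0.5 * (o - t)**2 (same sign convention as the script)."""
    h1, h2, o = forward(w, x1, x2, b)
    d_o = -(o - t) * o * (1.0 - o)        # corresponds to derivative_O1
    d_h1 = h1 * (1.0 - h1) * w[6] * d_o   # corresponds to derivative_h1
    d_h2 = h2 * (1.0 - h2) * w[7] * d_o   # corresponds to derivative_h2
    # The raw inputs and bias values multiply the deltas
    return [x1 * d_h1, x1 * d_h2, x2 * d_h1, x2 * d_h2,
            b * d_h1, b * d_h2, h1 * d_o, h2 * d_o, b * d_o]


def numeric_grads(w, x1, x2, t, eps=1e-6, b=1.0):
    """Central-difference estimate of -dE/dw[i]."""
    def loss(weights):
        return 0.5 * (forward(weights, x1, x2, b)[2] - t) ** 2

    grads = []
    for i in range(len(w)):
        plus, minus = list(w), list(w)
        plus[i] += eps
        minus[i] -= eps
        grads.append(-(loss(plus) - loss(minus)) / (2 * eps))
    return grads


random.seed(0)
w = [random.uniform(-1.0, 1.0) for _ in range(9)]
patterns = [((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0), ((0.0, 1.0), 1.0), ((0.0, 0.0), 0.0)]
for (x1, x2), t in patterns:
    analytic = backprop_grads(w, x1, x2, t)
    numeric = numeric_grads(w, x1, x2, t)
    print((x1, x2), max(abs(a - n) for a, n in zip(analytic, numeric)))
```

The printed differences should be tiny (roughly at the level of floating-point noise). For the `[0.0, 0.0]` pattern, the gradients of the four input weights are exactly zero, because only the bias paths carry signal.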

The resulting graph is as follows.

weight_data1.txt (weight index, value):

```text
0 -4.749828824761969
1 -3.5287652884908254
2 -4.747508485076842
3 -3.5276048615858935
4 1.816578868741784
5 5.375092217625854
6 -13.742230835984616
7 13.712746773928748
8 -6.530714643755955
```
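A quick way to check saved weights is to run the script's forward pass on all four XOR inputs. The sketch below reads lines in the `index value` format that `save_data()` writes and assumes the biases are 1.0, as in the script. `parse_weights` and `predict` are hypothetical helper names.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def parse_weights(lines):
    """Parse 'index value' lines (the format save_data() writes) into a list."""
    by_index = {}
    for line in lines:
        if line.strip():
            index, value = line.split()
            by_index[int(index)] = float(value)
    return [by_index[i] for i in range(len(by_index))]


def predict(w, x1, x2, bias=1.0):
    """Forward pass with the script's layout: w[0..5] hidden layer, w[6..8] output."""
    h1 = sigmoid(x1 * w[0] + x2 * w[2] + bias * w[4])
    h2 = sigmoid(x1 * w[1] + x2 * w[3] + bias * w[5])
    return sigmoid(h1 * w[6] + h2 * w[7] + bias * w[8])


# Values copied from weight_data1.txt above. With the real file:
#     with open('weight_data1.txt', encoding='utf-8') as f: w = parse_weights(f)
saved = """0 -4.749828824761969
1 -3.5287652884908254
2 -4.747508485076842
3 -3.5276048615858935
4 1.816578868741784
5 5.375092217625854
6 -13.742230835984616
7 13.712746773928748
8 -6.530714643755955"""

w = parse_weights(saved.splitlines())
for x1, x2 in [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]:
    print(x1, x2, round(predict(w, x1, x2), 4))
```

With the weights listed above, (1, 0) and (0, 1) give outputs close to 1 and (1, 1) and (0, 0) give outputs close to 0. Rounding each output therefore reproduces the XOR truth table.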

errorData1.txt (epoch, RMSE error; selected lines):

```text
0 0.5424391889837473
1 0.5331220459189032
2 0.5304319567906218
10 0.5274519675428608
100 0.5198658296518869
200 0.5155776076419263
300 0.5046587144670703
400 0.4791696883760997
1000 0.09849463249517668
2000 0.03296842320700676
3000 0.02307589090605272
9999 0.010292985691909057
```
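Each line of errorData1.txt holds an epoch number and the RMSE over the four training patterns, $\sqrt{(e_0^2 + e_1^2 + e_2^2 + e_3^2)/4}$ with $e_i$ = output − target, as computed in `train_neural_net()`. The helpers below are hypothetical (`rmse`, `first_epoch_below`). They recompute that value from a list of errors and find the first logged epoch whose RMSE drops below a threshold. The 0.1 threshold is an arbitrary choice for illustration.

```python
import math


def rmse(errors):
    """Root-mean-square error, as computed once per epoch in train_neural_net()."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def first_epoch_below(lines, threshold):
    """Return the first epoch in an 'epoch rmse' log whose RMSE < threshold, else None."""
    for line in lines:
        if not line.strip():
            continue
        epoch, value = line.split()
        if float(value) < threshold:
            return int(epoch)
    return None


# A few of the lines shown above (the real file has one line per epoch)
log = """0 0.5424391889837473
400 0.4791696883760997
1000 0.09849463249517668
9999 0.010292985691909057"""

print(first_epoch_below(log.splitlines(), 0.1))
```

On this excerpt it returns 1000. Running it on the full file would give the exact first epoch.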
