이제 머신러닝을 위한 대량의 훈련 데이터를 만들어보자.

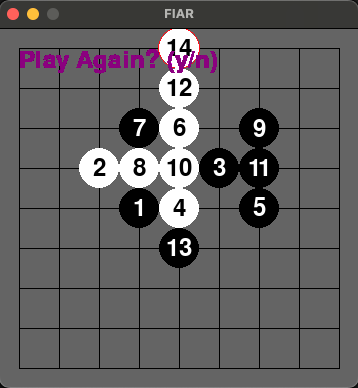

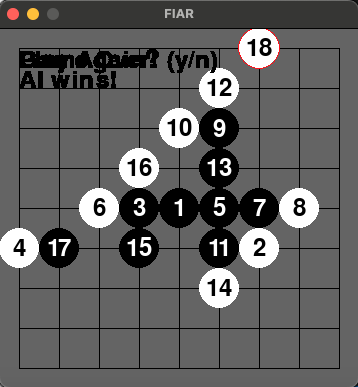

플레이어와 AI는 모두 랜덤 모드로 수를 연산하도록 설정했다. 수를 놓을 때 3자리 이하인 부분에서는,

AI는 점수를 계산하여 높은 점수의 자리에 돌을 놓도록 했다. 플레이어는 3자리 이하인 부분에서 인접한 빈자리 중 랜덤한 위치에 돌을 놓도록 난이도에 약간의 차이를 두었다. 즉, 대부분 비기거나 AI가 좀더 잘 두도록 설정하였다.

#

# FIAR

# Created by netcanis on 2023/10/29.

#

# minimax

# alpha beta pruning

# 속도 개선

# 훈련데이터 생성하여 머신러닝.

import pygame

import random

import numpy as np

import sys

import os

import tensorflow as tf

from keras.models import Sequential, load_model

import pandas as pd

BOARD_SIZE = 9 # (7,9,13,15,19)

CELL_SIZE = 40

MARGIN = 20

CLICK_RADIUS = CELL_SIZE // 2

BLACK = (0, 0, 0)

WHITE = (255, 255, 255)

RED = (255, 0, 0)

GRAY = (100, 100, 100)

NUM_ITEMS = (BOARD_SIZE * BOARD_SIZE)

PLAYER = 1

AI = -1

DIFFICULTY_LEVEL_AI = 4

DIFFICULTY_LEVEL_PLAYER = 2

AUTO_PLAY = True

if AUTO_PLAY == True:

PLAYER_MODE = "RANDOM"#"MINIMAX"

AI_MODE = "RANDOM"#"MINIMAX"

else:

PLAYER_MODE = "MANUAL"

AI_MODE = "RANDOM"#"ML"#"MINIMAX"

NUM_EPISODES = 20000

CSV_FILE_NAME = "fiar_training_data.csv"

H5_FILE_NAME = "fiar_model.h5"

class FIAR:

def __init__(self):

self.init_game()

def init_game(self):

self.episode = 0

pygame.init()

pygame.display.set_caption("FIAR")

w = (BOARD_SIZE-1) * CELL_SIZE + 2 * MARGIN

h = (BOARD_SIZE-1) * CELL_SIZE + 2 * MARGIN

self.screen = pygame.display.set_mode((w, h))

self.font = pygame.font.Font(None, CELL_SIZE-4)

def reset_game(self):

self.board = np.zeros((BOARD_SIZE, BOARD_SIZE), dtype=int)

self.sequences = np.zeros((BOARD_SIZE, BOARD_SIZE), dtype=int)

self.sequence = 0

self.game_over = False

self.turn_player = random.choice([PLAYER, AI]) if AUTO_PLAY == True else PLAYER

self.draw_board(self.screen)

pygame.display.flip()

def find_empty_cells(self):

empty_cells = []

for row in range(BOARD_SIZE):

for col in range(BOARD_SIZE):

if self.board[row][col] == 0:

empty_cells.append((row, col))

random.shuffle(empty_cells)

return empty_cells

def find_adjacent_empty_cells(self):

empty_cells = []

empty_cells2 = []

for row, col in self.find_empty_cells():

for dr in [-1, 0, 1]:

for dc in [-1, 0, 1]:

new_row, new_col = row + dr, col + dc

if 0 <= new_row < BOARD_SIZE and 0 <= new_col < BOARD_SIZE and self.board[new_row][new_col] != 0:

# 수를 놓았을 경우 연결된 수가 3개 이상이고 5개를 놓을 수 있는 자리일 경우만 배열에 추가.

self.board[row][col] = self.turn_player

c1, c2, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = -self.turn_player

c3, c4, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = 0

if (c1 >= 3 and c2 >= 5) or (c3 >= 3 and c4 >= 5):

empty_cells2.append((row, col))

empty_cells.append((row, col))

break

if len(empty_cells2) > 0:

return empty_cells2

return empty_cells

def evaluate_position(self, board, row, col):

def check_direction(dx, dy):

count = 1

r, c = row + dx, col + dy

while 0 <= r < len(board) and 0 <= c < len(board[0]) and board[r][c] == board[row][col]:

count += 1

r += dx

c += dy

return count

def check_direction_with_blank(dx, dy):

count = 1

r, c = row + dx, col + dy

while 0 <= r < len(board) and 0 <= c < len(board[0]) and (board[r][c] == board[row][col] or board[r][c] == 0):

count += 1

r += dx

c += dy

return count

total_count1 = 0

total_count2 = 0

total_score = 0

directions = [(0, 1), (1, 0), (1, 1), (-1, 1)]

for dx, dy in directions:

count1 = check_direction( dx, dy)

count2 = check_direction(-dx, -dy)

dir_count1 = count1 + count2 - 1

count3 = check_direction_with_blank( dx, dy)

count4 = check_direction_with_blank(-dx, -dy)

dir_count2 = count3 + count4 - 1

total_count1 = max(total_count1, dir_count1)

total_count2 = max(total_count2, dir_count2)

# 각 방향별로 연결된 돌의 수가 3자리 이상이고 5개이상 놓을 수 있는 자리라면 점수를 추가한다.

# 즉, 연결되는 돌이 교차하는 위치라면 점수가 높게 나온다.

if dir_count1 == 4 and dir_count2 >= 5:

total_score += dir_count1 * 4

elif dir_count1 >= 3 and dir_count2 >= 5:

total_score += dir_count1 * 2

elif dir_count1 >= 2 and dir_count2 >= 5:

total_score += dir_count1

return total_count1, total_count2, total_score

def check_winner(self, board, row, col):

if board[row][col] == 0:

return False

def check_direction(dx, dy):

count = 1

r, c = row + dx, col + dy

while 0 <= r < len(board) and 0 <= c < len(board[0]) and board[r][c] == board[row][col]:

count += 1

r += dx

c += dy

return count

directions = [(0, 1), (1, 0), (1, 1), (-1, 1)]

for dx, dy in directions:

count1 = check_direction(dx, dy)

count2 = check_direction(-dx, -dy)

total_count = count1 + count2 - 1

if total_count == 5:

return True

return False

def is_board_full(self, board):

return all(cell != 0 for row in board for cell in row)

def random_move(self, player):

if self.game_over:

return -1, -1

best_move = None

best_score = 0

best_move = self.make_optimal_move(player)

if best_move == None:

if player == AI:

empty_cells = self.find_adjacent_empty_cells()

for row, col in empty_cells:

self.board[row][col] = player

s1 = self.evaluate_position(self.board, row, col)[2]

if s1 > best_score:

best_score = s1

best_move = (row, col)

self.board[row][col] = -player

s2 = self.evaluate_position(self.board, row, col)[2]

if s2 > best_score:

best_score = s2

best_move = (row, col)

self.board[row][col] = 0

if best_score == 0:

best_move = random.choice(self.find_empty_cells())

else:

best_move = random.choice(self.find_empty_cells())

self.make_move(best_move[0], best_move[1], player)

return best_move

def minimax_move(self, player):

if self.game_over:

return -1, -1

row, col = self.find_best_move(player)

self.make_move(row, col, player)

return row, col

def make_move(self, row, col, player):

if self.board[row][col] == 0:

self.board[row][col] = player

self.sequence += 1

self.sequences[row][col] = self.sequence

#print(f"[{self.sequence}] <{player}> {row},{col}")

if self.check_winner(self.board, row, col):

self.game_over = True

elif self.is_board_full(self.board):

self.game_over = True

self.turn_player = 0

else:

self.turn_player *= -1

def minimax(self, depth, is_maximizing, alpha, beta, row2, col2):

if self.is_board_full(self.board):

return 0

if is_maximizing:

if self.check_winner(self.board, row2, col2):

return -(NUM_ITEMS - depth + 1)

else:

if self.check_winner(self.board, row2, col2):

return (NUM_ITEMS - depth + 1)

if (self.turn_player == AI and depth >= DIFFICULTY_LEVEL_AI) or \

(self.turn_player == PLAYER and depth >= DIFFICULTY_LEVEL_PLAYER):

return 0

if is_maximizing:# AI

best_score = -float('inf')

for row, col in self.find_adjacent_empty_cells():

self.board[row][col] = AI

score = self.minimax(depth + 1, False, alpha, beta, row, col)

self.board[row][col] = 0

best_score = max(best_score, score)

alpha = max(alpha, best_score)

if beta <= alpha:

break

return best_score

else:

best_score = float('inf')

for row, col in self.find_adjacent_empty_cells():

self.board[row][col] = PLAYER

score = self.minimax(depth + 1, True, alpha, beta, row, col)

self.board[row][col] = 0

best_score = min(best_score, score)

beta = min(beta, best_score)

if beta <= alpha:

break

return best_score

def make_optimal_move(self, player):

# 0. The first one is random.

if self.sequence == 0:

row = BOARD_SIZE // 2 + random.randint(-2, 2)

col = BOARD_SIZE // 2 + random.randint(-2, 2)

return (row, col)

empty_cells = self.find_adjacent_empty_cells()

# 1. ai turn: 5

for row, col in empty_cells:

self.board[row][col] = player

if self.check_winner(self.board, row, col):

self.board[row][col] = 0

return (row, col)

self.board[row][col] = 0

# 2. player turn: 5

for row, col in empty_cells:

self.board[row][col] = -player

if self.check_winner(self.board, row, col):

self.board[row][col] = 0

return (row, col)

self.board[row][col] = 0

if (player == PLAYER and PLAYER_MODE == "RANDOM") or (player == AI and AI_MODE == "RANDOM"):

# 3. The position that becomes 4 when placed

for row, col in empty_cells:

self.board[row][col] = player

c1, c2, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = -player

c3, c4, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = 0

if (c1 == 4 and c2 >= 5) or (c3 == 4 and c4 >= 5):

return (row, col)

# 4. The position that becomes 3 when placed

for row, col in empty_cells:

self.board[row][col] = player

c1, c2, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = -player

c3, c4, _ = self.evaluate_position(self.board, row, col)

self.board[row][col] = 0

if (c1 == 3 and c2 >= 5) or (c3 == 3 and c4 >= 5):

return (row, col)

return None

def find_best_move(self, player):

if AI_MODE == "RANDOM":

best_move = self.random_move(player)

else: # MINIMAX

print(f"[{self.sequence+1}] <{player}> ...")

optimal_move = self.make_optimal_move(player)

if optimal_move != None:

return optimal_move

alpha = -float('inf')

beta = float('inf')

best_move = None

best_score = -float('inf') if player == AI else float('inf')

empty_cells = self.find_adjacent_empty_cells()

for index, (row, col) in enumerate(empty_cells):

self.board[row][col] = player

is_maximizing = False if player == AI else True

score = self.minimax(0, is_maximizing, alpha, beta, row, col)

self.board[row][col] = 0

if (player == AI and score > best_score) or (player == PLAYER and score < best_score):

best_score = score

best_move = (row, col)

percentage = (index / len(empty_cells)) * 100

print(f" [{percentage:.1f}%] <{player}> {row},{col} -> {score}")

print(f" {best_move[0]},{best_move[1]} ({best_score})")

return best_move

def show_message(self, message, is_exit=False):

popup = True

while popup:

for event in pygame.event.get():

if event.type == pygame.QUIT:

pygame.quit()

sys.exit()

if event.type == pygame.KEYDOWN:

if is_exit:

if event.key == pygame.K_y:

self.reset_game()

popup = False

return

elif event.key == pygame.K_n:

pygame.quit()

sys.exit()

else:

popup = False

message = ""

self.draw_board(self.screen)

break

text_lines = message.split('\n')

for i, line in enumerate(text_lines):

text = self.font.render(line, True, (128, 0, 128))

text_rect = text.get_rect(topleft=(20, 20 + i * 20))

self.screen.blit(text, text_rect)

pygame.display.flip()

def draw_board(self, screen):

screen.fill(GRAY)

for row in range(BOARD_SIZE):# draw horizontal lines

pygame.draw.line(screen, BLACK,

(0 * CELL_SIZE + MARGIN, row * CELL_SIZE + MARGIN),

((BOARD_SIZE-1) * CELL_SIZE + MARGIN, row * CELL_SIZE + MARGIN),

1)

for col in range(BOARD_SIZE):# draw vertical lines

if row == 0:

pygame.draw.line(screen, BLACK,

(col * CELL_SIZE + MARGIN, 0 * CELL_SIZE + MARGIN),

(col * CELL_SIZE + MARGIN, (BOARD_SIZE-1) * CELL_SIZE + MARGIN),

1)

x = col * CELL_SIZE + MARGIN

y = row * CELL_SIZE + MARGIN

if self.board[row][col] == PLAYER:

pygame.draw.circle(screen, BLACK, (x, y), CLICK_RADIUS)

elif self.board[row][col] == AI:

pygame.draw.circle(screen, WHITE, (x, y), CLICK_RADIUS)

seq_no = self.sequences[row][col]

if seq_no != 0:

if seq_no == self.sequence:

pygame.draw.circle(screen, RED, (x, y), CLICK_RADIUS+1, 1)

color = WHITE if self.board[row][col] == PLAYER else BLACK

text = self.font.render(f"{seq_no}", True, color)

text_rect = text.get_rect(center=(x, y))

screen.blit(text, text_rect)

def handle_events(self):

for event in pygame.event.get():

if event.type == pygame.QUIT:

self.game_over = True

elif event.type == pygame.MOUSEBUTTONDOWN and not self.game_over and self.turn_player == PLAYER:

x, y = pygame.mouse.get_pos()

row = int(round((y - MARGIN) / CELL_SIZE))

col = int(round((x - MARGIN) / CELL_SIZE))

if 0 <= row < BOARD_SIZE and 0 <= col < BOARD_SIZE and self.board[row][col] == 0:

self.make_move(row, col, self.turn_player)

self.draw_board(self.screen)

pygame.display.flip()

if self.turn_player == AI:

best_move = self.find_best_move(self.turn_player)

if best_move is not None:

row, col = best_move

self.make_move(row, col, self.turn_player)

self.draw_board(self.screen)

pygame.display.flip()

if self.game_over == True:

if self.turn_player == 0:

self.show_message("Game draw!")

else:

self.show_message(f"Game Over!\n{'Player' if self.turn_player == PLAYER else 'AI'} wins!")

def init_ML(self):

hidden_layer_count = 3 * NUM_ITEMS

self.model = tf.keras.Sequential([

tf.keras.layers.Dense(hidden_layer_count, activation='relu', input_shape=(NUM_ITEMS,)),

tf.keras.layers.Dense(NUM_ITEMS, activation='softmax')

])

self.model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

def learning(self):

if os.path.exists(CSV_FILE_NAME) == False:

x_data, y_data = self.generate_training_data(NUM_EPISODES)

self.save_data_to_csv(x_data, y_data, CSV_FILE_NAME)

print(f"{CSV_FILE_NAME} 저장 완료")

else:

x_data, y_data = self.load_data_from_csv(CSV_FILE_NAME)

self.model.fit(x_data, y_data, epochs=100, verbose=1)

self.model.save(H5_FILE_NAME)

test_results = self.model.evaluate(x_data, y_data)

print(f"손실(Loss): {test_results[0]}")

print(f"정확도(Accuracy): {test_results[1]}")

def generate_training_data(self, num_games):

x_data = []

y_data = []

while self.episode < num_games:

self.reset_game()

x = []

y = []

while True:

row, col = self.get_next_move(self.turn_player)

x.append(np.array(self.board).flatten())

y.append(np.eye(NUM_ITEMS)[row * BOARD_SIZE + col])

self.draw_board(self.screen)

pygame.display.flip()

if self.check_winner(self.board, row, col):

#print(f"{self.board}")

break

if self.is_board_full(self.board):

#print(f"{self.board}")

break

if self.turn_player != PLAYER:

del x[-1]

del y[0]

x_data.extend(x)

y_data.extend(y)

self.episode += 1

print(f"{self.episode}: {self.turn_player} win.")

return np.array(x_data), np.array(y_data)

def get_next_move(self, player):

if (player == AI and AI_MODE == "MINIMAX") or (player == PLAYER and PLAYER_MODE == "MINIMAX"):

return self.minimax_move(player)

else:

return self.random_move(player)

def save_data_to_csv(self, x_data, y_data, file_name):

x_data_flat = [x.flatten() for x in x_data]

y_data_flat = [y.flatten() for y in y_data]

data = {'x_data': x_data_flat, 'y_data': y_data_flat}

df = pd.DataFrame(data)

df.to_csv(file_name, index=False)

def load_data_from_csv(self, file_name):

df = pd.read_csv(file_name)

x_data_flat = df['x_data'].apply(lambda x: np.fromstring(x[1:-1], sep=' '))

y_data_flat = df['y_data'].apply(lambda x: np.fromstring(x[1:-1], sep=' '))

x_data = np.array(x_data_flat.to_list())

y_data = np.array(y_data_flat.to_list())

return x_data, y_data

if __name__ == "__main__":

game = FIAR()

game.init_ML()

game.learning()

2023.10.27 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (1/5) - 기본 구현 (minimax, alpha-beta pruning)

2023.10.27 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (2/5) - 속도 최적화 1차 (minimax 속도 개선)

2023.10.28 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (3/5) - 속도 최적화 2차 (RANDOM모드 추가)

2023.10.29 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (4/5) - 훈련 데이터 생성 및 학습

2023.10.29 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (5/5) - 머신러닝으로 게임 구현

2023.11.03 - [AI,ML, Algorithm] - Gomoku(Five in a Row, Omok) (5/5) - 3x3 체크 추가